Predictive Red Teaming: Breaking Policies Without Breaking Robots

Abstract

Visuomotor policies trained via imitation learning can perform challenging manipulation tasks, but are often extremely brittle to changes in lighting, visual distractors, and object locations. These vulnerabilities can depend unpredictably on the specifics of training, and are challenging to expose without time-consuming and expensive hardware evaluations. We propose the problem of predictive red teaming: discovering vulnerabilities of a policy with respect to environmental factors, and predicting the corresponding performance degradation without hardware evaluations in off-nominal scenarios. To achieve this, we develop RoboART: an automated red teaming (ART) pipeline that (1) modifies nominal observations using generative image editing to vary different environmental factors, and (2) predicts performance under each variation using a policy-specific anomaly detector executed on edited observations. Experiments across 500+ hardware trials in twelve off-nominal conditions for visuomotor diffusion policies demonstrate that RoboART predicts performance degradation with high accuracy (less than 0.19 average difference between predicted and real success rates). We also demonstrate how predictive red teaming enables targeted data collection: fine-tuning with data collected under conditions predicted to be adverse boosts baseline performance by 2-7x.

Predictive Red Teaming

We introduce the problem of predictive red teaming: discovering vulnerabilities of a given robot policy with respect to changes in environmental factors, and predicting the (relative or absolute) degradation in performance without performing hardware evaluations in off-nominal scenarios.

Approach

We introduce RoboART (Robotics Auto-Red-Teaming): a method for predictive red teaming using generative image editing and anomaly detection. We focus on visuomotor policies that rely primarily on RGB image observations. Our approach has two main steps. First, we use generative image editing tools to modify nominal observations to reflect changes in various factors of interest (e.g., background, lighting, distractor objects). For each factor, we then predict the performance degradation of the policy using anomaly detection. We describe each of these steps below.

Step 1: Generative Image Editing

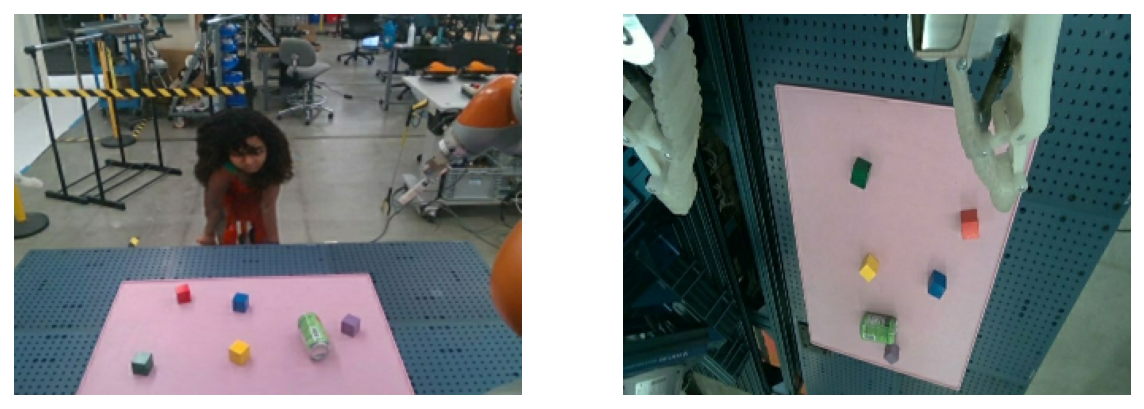

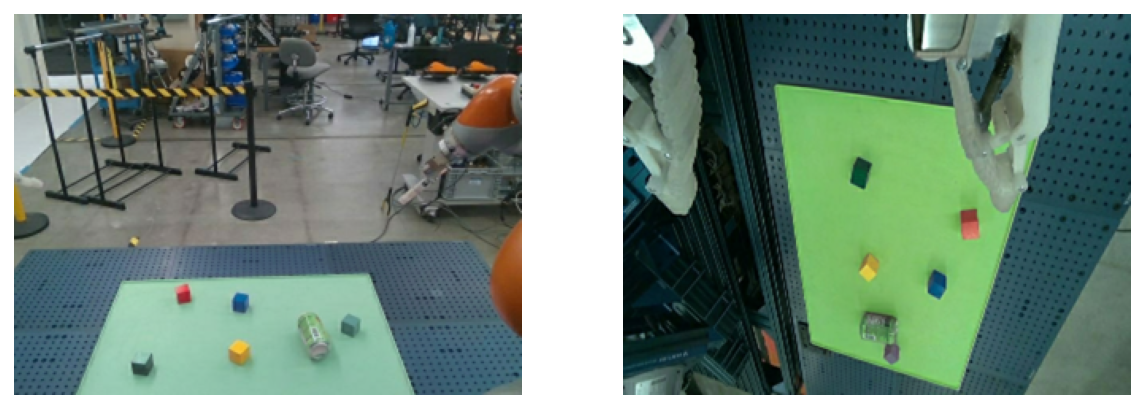

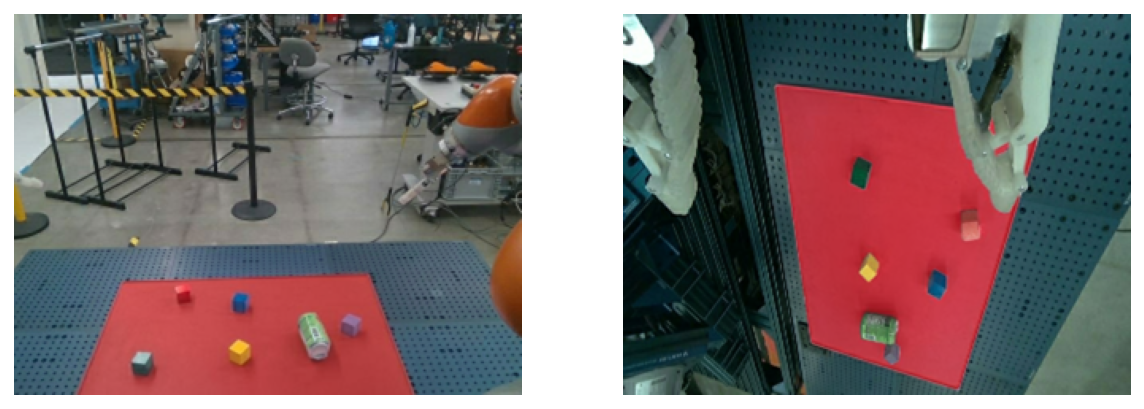

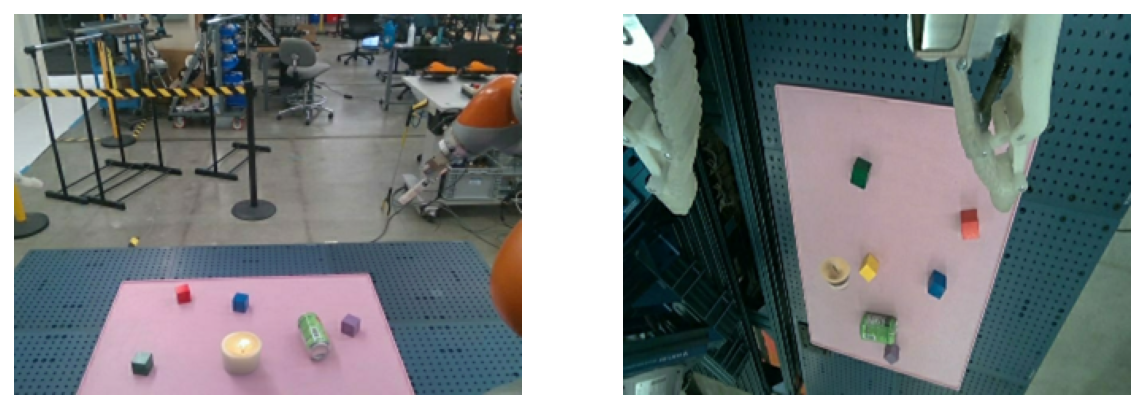

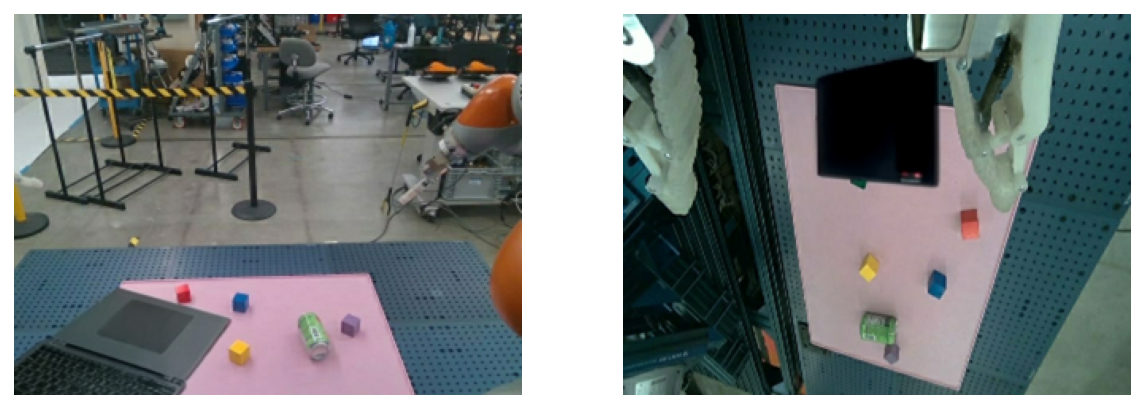

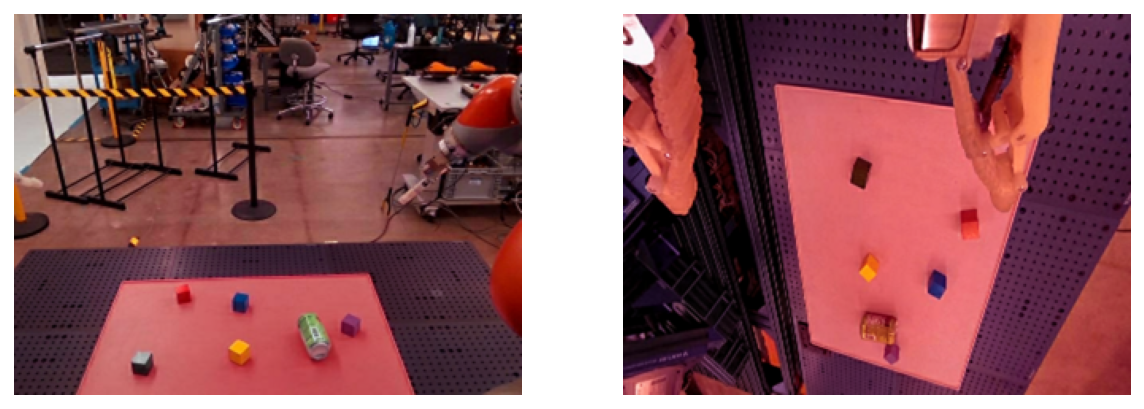

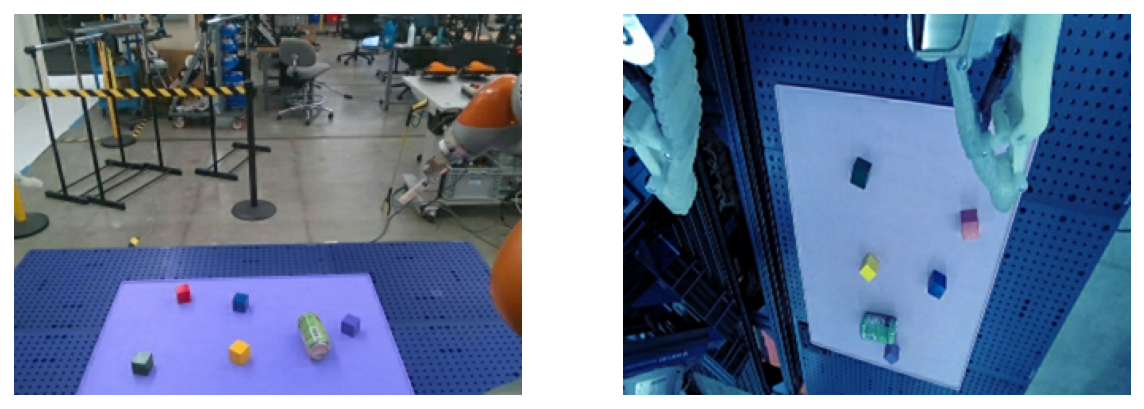

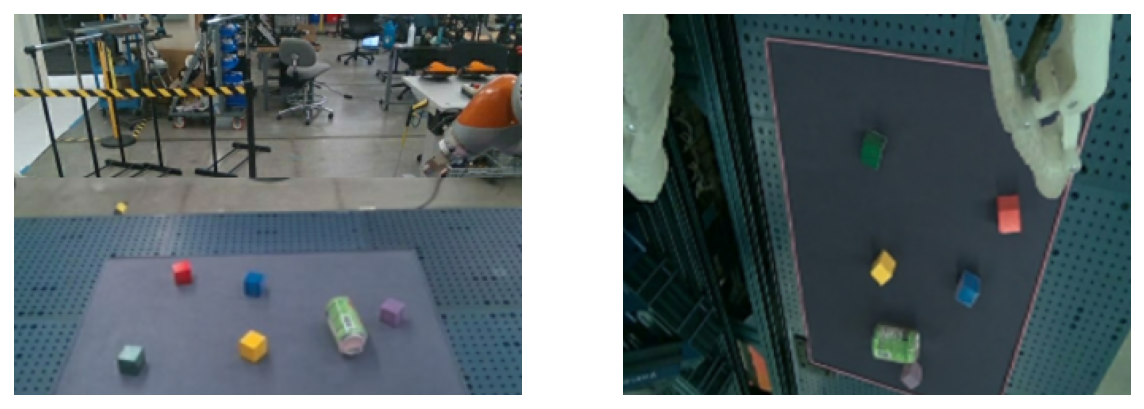

First, we use automated image editing tools (Imagen 3) to modify a set of nominal RGB observations by varying different factors of interest in a fine-grained and realistic manner via language instructions (e.g., “add a person close to the table”).
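The structure of this step can be sketched as a loop over factors and nominal images. This is a minimal illustration, not the pipeline used in the paper: `edit_fn` is a hypothetical stand-in for a call to a generative image editor, `placeholder_edit` only exists so the sketch runs, and every instruction except the one quoted above is made up.

```python
from typing import Callable, Dict, List

import numpy as np

# One language instruction per environmental factor.
# Only the "distractor_person" instruction is quoted from the text;
# the other two are hypothetical examples.
EDIT_INSTRUCTIONS: Dict[str, str] = {
    "distractor_person": "add a person close to the table",
    "lighting": "dim the lights in the room",             # hypothetical
    "background": "change the wall behind the table",     # hypothetical
}

EditFn = Callable[[np.ndarray, str], np.ndarray]


def build_edited_sets(
    nominal_images: List[np.ndarray],
    instructions: Dict[str, str],
    edit_fn: EditFn,
) -> Dict[str, List[np.ndarray]]:
    """Apply each factor's instruction to every nominal image."""
    return {
        factor: [edit_fn(image, instruction) for image in nominal_images]
        for factor, instruction in instructions.items()
    }


def placeholder_edit(image: np.ndarray, instruction: str) -> np.ndarray:
    """Stand-in for a generative editor (assumption, not a real model call).

    Halves pixel intensities when the instruction mentions "dim";
    otherwise returns an unchanged copy.
    """
    if "dim" in instruction:
        return (image.astype(np.float32) * 0.5).astype(np.uint8)
    return image.copy()
```

In practice `edit_fn` would wrap whichever image editing model is available; the rest of the pipeline only needs the resulting dictionary of edited observations per factor.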

Step 2: Predict Performance

The second step is to predict the degradation in performance induced by each environmental factor using anomaly detection. We find that a simple anomaly detector is surprisingly predictive of both relative and absolute performance degradation: it computes distances in the policy's embedding space between edited observations and a set of nominal observations, and flags an observation as anomalous when its distance exceeds a threshold computed using conformal prediction. Specifically, we predict the success rate for a given environmental factor as 1 - anomaly rate, where the anomaly rate is the fraction of edited observations for that factor flagged as anomalous.
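The prediction rule can be sketched as follows. This is one plausible instantiation under stated assumptions, not the paper's implementation: the anomaly score is assumed to be the Euclidean distance to the nearest nominal embedding, the threshold is assumed to come from split conformal prediction on held-out nominal embeddings, and `alpha` and all function names are illustrative.

```python
import numpy as np


def nn_distance(queries: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Euclidean distance from each query embedding to its nearest reference embedding."""
    diffs = queries[:, None, :] - reference[None, :, :]
    return np.linalg.norm(diffs, axis=-1).min(axis=1)


def conformal_threshold(calibration_scores: np.ndarray, alpha: float = 0.05) -> float:
    """Split-conformal threshold: the ceil((n + 1) * (1 - alpha))-th smallest score.

    Returns infinity when there are too few calibration scores for the
    requested alpha (nothing can then be flagged as anomalous).
    """
    n = len(calibration_scores)
    k = int(np.ceil((n + 1) * (1.0 - alpha)))
    if k > n:
        return float("inf")
    return float(np.sort(calibration_scores)[k - 1])


def predict_success_rate(
    edited_emb: np.ndarray, reference_emb: np.ndarray, threshold: float
) -> float:
    """Predicted success rate = 1 - fraction of edited observations flagged anomalous."""
    scores = nn_distance(edited_emb, reference_emb)
    anomaly_rate = float(np.mean(scores > threshold))
    return 1.0 - anomaly_rate


if __name__ == "__main__":
    # Synthetic embeddings standing in for policy embeddings (illustrative only).
    rng = np.random.default_rng(0)
    nominal = rng.normal(size=(200, 16))
    reference, calibration = nominal[:100], nominal[100:]
    threshold = conformal_threshold(nn_distance(calibration, reference), alpha=0.05)
    shifted = rng.normal(loc=5.0, size=(50, 16))  # a strongly off-nominal factor
    print(predict_success_rate(shifted, reference, threshold))
```

Holding out a calibration split keeps the threshold from being computed on the same embeddings used as the reference set, which would otherwise give nearest-neighbor distances of zero.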

Experiments

We evaluated RoboART using 500+ hardware experiments that vary twelve environmental factors for two visuomotor diffusion policies with significantly different architectures.

Results

We find that RoboART predicts performance degradation with a high degree of accuracy. The figure below evaluates predictions made by RoboART for a policy that combines diffusion with trajectory optimization (left: rankings of environmental factors by performance degradation, and right: absolute success rates). Predictions are compared to the true rankings and success rates as measured by 20+ hardware evaluations for each factor. RoboART correctly predicts that changing the table height will degrade performance significantly, changing the background and lighting will degrade performance moderately, and adding distractors will induce a mild degradation. The Spearman rank correlation between predicted and real ranks is 0.8. The difference between predicted and real success rates averaged across the twelve factors is 0.1.
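The two summary metrics quoted above can be computed as in the sketch below. The success rates are made-up placeholders, not results from the paper, and the ranking helper assumes no ties between factors (tied values would need average ranks).

```python
import numpy as np


def ranks(values) -> np.ndarray:
    """0-based ascending ranks. Assumes all values are distinct (no ties)."""
    return np.argsort(np.argsort(values))


def spearman(predicted, real) -> float:
    """Spearman rank correlation, computed as the Pearson correlation of the ranks."""
    return float(np.corrcoef(ranks(predicted), ranks(real))[0, 1])


def mean_abs_diff(predicted, real) -> float:
    """Average absolute difference between predicted and real success rates."""
    return float(np.mean(np.abs(np.asarray(predicted) - np.asarray(real))))


if __name__ == "__main__":
    # Hypothetical per-factor success rates, for illustration only.
    predicted = [0.9, 0.7, 0.5, 0.3, 0.1]
    real = [0.8, 0.9, 0.4, 0.1, 0.2]
    print(spearman(predicted, real), mean_abs_diff(predicted, real))
```

The rank correlation measures whether factors are ordered correctly by severity, while the mean absolute difference measures how close the predicted success rates are in absolute terms; a predictor can do well on one and poorly on the other.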

Targeted Data Collection

We demonstrate the utility of predictive red teaming for targeted data collection: by co-finetuning the policy with data collected in the three conditions predicted to be the most adverse, performance is boosted in these conditions by 2-7x. Moreover, targeted data collection also yields cross-domain generalization: the performance of the policy is improved by 2-5x even for conditions where we did not collect data.
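Choosing where to collect data reduces to picking the factors with the lowest predicted success rates. A minimal sketch, with a hypothetical function name and made-up predicted values:

```python
from typing import Dict, List


def most_adverse_factors(predicted_success: Dict[str, float], k: int = 3) -> List[str]:
    """Return the k factors with the lowest predicted success rate, worst first."""
    return sorted(predicted_success, key=predicted_success.get)[:k]


if __name__ == "__main__":
    # Hypothetical predicted success rates, for illustration only.
    predicted = {
        "table_height": 0.1,
        "background": 0.5,
        "lighting": 0.4,
        "distractor": 0.8,
        "object_pose": 0.6,
    }
    print(most_adverse_factors(predicted))
```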

Acknowledgements

We are grateful to Shixin Luo and Suraj Kothawade for extremely helpful pointers on the image editing pipeline. We thank Alex Irpan for insightful feedback on an early version of the paper. We are grateful to Dave Orr, Anca Dragan, and Vincent Vanhoucke for continuous guidance on safety-related topics; and Frankie Garcia and Dawn Bloxwich on responsible development. The website template is modified from Code as Policies.